MPI/OpenMP hybrid example

Typically one builds an MPI application with the compiler wrappers mpicc (for C codes), mpifort (for Fortran codes), or mpiCC (for C++ codes). The module openmpi needs to be loaded to build an application against Open MPI. Alternatively, Open MPI supports use of pkg… On Cori, applications built with Open MPI can be launched via srun or Open MPI's mpirun command.

In Slurm, job arrays are requested with the --array option, for example "--array=0-15" or "--array=0,6,16-32". A step function can also be specified with a suffix containing a colon and number; for example, "--array=0-15:4" is equivalent to "--array=0,4,8,12". Executables can also be broadcast to the allocated nodes: "srun --bcast=/tmp/mine -N3 a.out" copies the file "a.out" from your current directory to the file "/tmp/mine" on each of the three allocated compute nodes and executes that file.

With Intel MPI, the default fabric selection order is dapl, ofa, tcp, tmi, ofi. That is, the Intel MPI library first checks whether the "dapl" fabric is available and fast enough to run the application; if that fails it tries ofa, then tcp, and so on.
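The fallback order described above amounts to a first-match search over an ordered list. The Python sketch below models only that idea. `select_fabric` and the `is_usable` probe are hypothetical names. The real availability and speed checks happen inside the Intel MPI library.

```python
from typing import Callable, Iterable, Optional

# Default fabric order as described in the text.
DEFAULT_FABRIC_ORDER = ("dapl", "ofa", "tcp", "tmi", "ofi")


def select_fabric(is_usable: Callable[[str], bool],
                  order: Iterable[str] = DEFAULT_FABRIC_ORDER) -> Optional[str]:
    """Return the first fabric whose (hypothetical) probe succeeds.

    `is_usable` stands in for the library's internal checks; it is an
    assumption for illustration, not an Intel MPI API.
    """
    for fabric in order:
        if is_usable(fabric):
            return fabric
    return None  # no fabric in the list passed the probe


# Example: a node without working dapl or ofa support.
available = {"tcp", "ofi"}
print(select_fabric(lambda f: f in available))  # tcp
```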

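To make the --array syntax concrete, the following sketch expands an index specification into the list of task IDs it describes. `expand_array_spec` is a hypothetical helper, since Slurm does this parsing itself. The sketch deliberately ignores other suffixes that Slurm accepts, such as a "%" limit on simultaneously running tasks.

```python
from typing import List


def expand_array_spec(spec: str) -> List[int]:
    """Expand a Slurm-style --array value such as "0-15", "0,6,16-32" or "0-15:4".

    Hypothetical helper for illustration only; the "%N" suffix is not handled.
    """
    indices: List[int] = []
    for part in spec.split(","):
        step = 1
        if ":" in part:                      # optional step suffix, e.g. "0-15:4"
            part, step_text = part.split(":", 1)
            step = int(step_text)
        if "-" in part:                      # inclusive range "start-end"
            start_text, end_text = part.split("-", 1)
            indices.extend(range(int(start_text), int(end_text) + 1, step))
        else:                                # single index
            indices.append(int(part))
    return indices


print(expand_array_spec("0-15:4"))          # [0, 4, 8, 12]
print(expand_array_spec("0,6,16-32")[:4])   # [0, 6, 16, 17]
```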
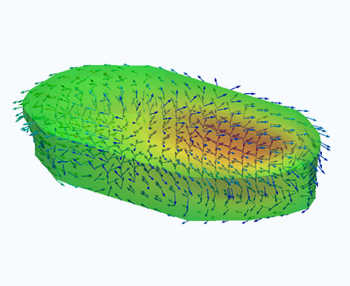

Message Passing Interface (MPI) is a standardized and portable message-passing standard designed to function on parallel computing architectures. The MPI standard defines the syntax and semantics of library routines that are useful to a wide range of users writing portable message-passing programs in C, C++, and Fortran. There are several open-source MPI implementations, such as Open MPI.

In computer science, a thread of execution is the smallest sequence of programmed instructions that can be managed independently by a scheduler, which is typically a part of the operating system. The implementation of threads and processes differs between operating systems, but in most cases a thread is a component of a process. The multiple threads of a given process may execute concurrently while sharing resources such as memory, whereas different processes do not share these resources. OpenMP, which parallelizes work across such threads, is often combined with MPI to achieve hybrid MPI/OpenMP parallelism.

A hybrid model combines more than one of the previously described programming models. Currently, a common example of a hybrid model is the combination of the message passing model (MPI) with the threads model (OpenMP). Threads perform computationally intensive kernels using local, on-node data, while communication between processes on different nodes occurs over the network using MPI.

For the Hurricane Maria model, the tuned hybrid parallel (MPI+OpenMP) version of WRF performed better on HBv2 VMs than the MPI-only version up to 128 nodes. Using a hybrid MPI/OpenMP parallel implementation, Aktulga et al. … Maxwell [4 7] obtains, in a weak-scaling exercise, parallel efficiencies of between 80% and 90% with 16,384 processors, depending on the type of preconditioner used.

Figure 4.
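A weak-scaling exercise like the one above keeps the work per processor fixed as processors are added, so the ideal runtime stays constant. Parallel efficiency is then commonly taken as the baseline runtime divided by the runtime at scale. The timings below are made up for illustration. They are not Maxwell's or WRF's measurements.

```python
def weak_scaling_efficiency(t_base: float, t_n: float) -> float:
    """Weak-scaling efficiency: baseline runtime divided by runtime at scale.

    Work per processor is assumed constant, so 1.0 means perfect scaling.
    """
    if t_base <= 0 or t_n <= 0:
        raise ValueError("timings must be positive")
    return t_base / t_n


# Illustrative (made-up) timings in seconds for a fixed per-processor workload.
timings = {1: 100.0, 1024: 110.0, 16384: 120.0}
for procs, t in timings.items():
    eff = weak_scaling_efficiency(timings[1], t)
    print(f"{procs:>6} processors: efficiency {eff:.0%}")
```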

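The two-level decomposition of the hybrid model can be sketched in plain Python as an analogy. The data are split into blocks for "ranks", each rank's block is split again among a team of threads, and the partial results are combined. The sketch makes three assumptions:

* The ranks are simulated one after another in a single process instead of being launched by MPI.
* `concurrent.futures.ThreadPoolExecutor` stands in for an OpenMP parallel loop.
* The final `sum` plays the role of an MPI reduction.

Because of CPython's global interpreter lock, this shows the structure, not a speedup.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence


def block_range(n: int, parts: int, index: int) -> range:
    """Indices owned by block `index` when n items are split into `parts` near-equal blocks."""
    base, extra = divmod(n, parts)
    start = index * base + min(index, extra)
    stop = start + base + (1 if index < extra else 0)
    return range(start, stop)


def rank_work(data: Sequence[float], rank: int, n_ranks: int, n_threads: int) -> float:
    """One simulated 'rank': split its local block among threads and reduce locally."""
    local = block_range(len(data), n_ranks, rank)

    def thread_sum(t: int) -> float:
        sub = block_range(len(local), n_threads, t)
        # Map thread-local offsets back to global indices inside this rank's block.
        return sum(data[local.start + i] for i in sub)

    with ThreadPoolExecutor(max_workers=n_threads) as pool:
        return sum(pool.map(thread_sum, range(n_threads)))


def hybrid_sum(data: Sequence[float], n_ranks: int, n_threads: int) -> float:
    """Combine per-rank partial sums, standing in for an MPI reduction."""
    partials: List[float] = [rank_work(data, r, n_ranks, n_threads) for r in range(n_ranks)]
    return sum(partials)


print(hybrid_sum(list(range(100)), n_ranks=4, n_threads=3))  # 4950
```

In a real code, the same structure would typically be an MPI program whose ranks each run an OpenMP parallel region over their local block.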
Cpptraj [31] (the successor to ptraj) is the main program in Amber for processing coordinate trajectories and data files. Cpptraj has a wide range of functionality and uses OpenMP and MPI to speed up many calculations, including processing ensembles of trajectories and/or conducting multiple analyses in parallel with MPI. A prototypical example of a probability distribution with two peaks is a molecule that can undergo a chemical reaction: the two probability peaks correspond to the reactant and the product states.

A question about C++ auto-vectorization with the Intel compiler (icc) and AVX-512 describes an example that executes N=10 independent operations inside a three-level loop nest. Unfortunately, the Intel compiler's auto-vectorization computes the cost at the loop level, and when …

CUDA (Compute Unified Device Architecture) is a parallel computing platform and application programming interface (API) that allows software to use certain types of graphics processing units (GPUs) for general-purpose processing, an approach called general-purpose computing on GPUs (GPGPU). CUDA is a software layer that gives direct access to the GPU's virtual instruction set and parallel computational elements for the execution of compute kernels.

The GPUs that GROMACS may use can be restricted by listing their device IDs. For example, the ID string 12 specifies that the GPUs with IDs 1 and 2 (as reported by the GPU runtime) can be used by mdrun. This is useful when sharing a node with other computations, or if a GPU that is dedicated to a display should not be used by GROMACS.
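The GPU selection example above treats each character of the ID string as one device ID. A hypothetical parser following that reading is sketched below. `parse_gpu_id_string` and `usable_gpus` are illustrative names, not GROMACS functions, and the sketch assumes single-digit device IDs.

```python
from typing import List


def parse_gpu_id_string(ids: str) -> List[int]:
    """Turn a GPU ID string such as "12" into [1, 2].

    Hypothetical helper: assumes each character is one single-digit device ID
    and rejects repeated IDs.
    """
    if not ids.isdigit():
        raise ValueError(f"expected a string of digits, got {ids!r}")
    gpu_ids = [int(ch) for ch in ids]
    if len(set(gpu_ids)) != len(gpu_ids):
        raise ValueError("each GPU ID should appear only once")
    return gpu_ids


def usable_gpus(ids: str, detected: List[int]) -> List[int]:
    """Return the requested IDs, checking that the runtime actually reports them."""
    requested = parse_gpu_id_string(ids)
    missing = [g for g in requested if g not in detected]
    if missing:
        raise ValueError(f"requested GPUs not detected: {missing}")
    return requested


# Node whose GPU 0 drives a display and should be left alone.
print(usable_gpus("12", detected=[0, 1, 2]))  # [1, 2]
```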

Topics in ad hoc networks include:

- Self-organized, mobile, and hybrid ad hoc networks
- Physical, medium access, networks, transport and application layers, and cross-layering issues
- Data communication protocols, routing and broadcasting
- Location service for efficient routing
- Topology control and maintenance
- Power management
- Security in ad hoc networks

Distributed computing is a field of computer science that studies distributed systems. A distributed system is a system whose components are located on different networked computers, which communicate and coordinate their actions by passing messages to one another. The components interact with one another in order to achieve a common goal. A Linux Beowulf cluster is an example of a loosely coupled system.
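The toy sketch below makes the message-passing definition concrete. Its components share no state and interact only by exchanging messages through queues, cooperating toward a common goal (here, a sum). Threads in one Python process stand in for networked computers purely for illustration. `worker` and `coordinate` are hypothetical names. A real distributed system would communicate over a network, for example with MPI or sockets.

```python
import queue
import threading
from typing import List, Optional, Tuple


def worker(inbox: "queue.Queue[Optional[List[int]]]",
           outbox: "queue.Queue[Tuple[str, int]]", name: str) -> None:
    """A component that interacts with others only by exchanging messages."""
    while True:
        msg = inbox.get()
        if msg is None:              # shutdown message
            break
        outbox.put((name, sum(msg)))  # reply with a partial result


def coordinate(chunks: List[List[int]], n_workers: int = 3) -> int:
    """Send chunks round-robin to workers and combine their replies."""
    inboxes: List["queue.Queue[Optional[List[int]]]"] = [queue.Queue() for _ in range(n_workers)]
    results: "queue.Queue[Tuple[str, int]]" = queue.Queue()
    threads = [threading.Thread(target=worker, args=(inboxes[i], results, f"node{i}"))
               for i in range(n_workers)]
    for t in threads:
        t.start()
    for i, chunk in enumerate(chunks):
        inboxes[i % n_workers].put(chunk)
    total = sum(results.get()[1] for _ in chunks)  # exactly one reply per chunk
    for box in inboxes:
        box.put(None)
    for t in threads:
        t.join()
    return total


print(coordinate([[1, 2], [3, 4], [5, 6], [7]]))  # 28
```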

In computing, a vector processor or array processor is a central processing unit (CPU) that implements an instruction set whose instructions are designed to operate efficiently and effectively on large one-dimensional arrays of data called vectors. This is in contrast to scalar processors, whose instructions operate on single data items only.

Stampede2, generously funded by the National Science Foundation (NSF) through award ACI-1540931, is one of the flagship supercomputers of the Texas Advanced Computing Center (TACC) at the University of Texas at Austin. Stampede2 entered full production in fall 2017 as an 18-petaflop national resource that builds on the successes of the original Stampede system.

This code employs a hybrid MPI-OpenMP programming model for the non-GPU versions, where memory affinity on NUMA architectures is very important for achieving the expected performance.
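Memory affinity matters because a thread that touches memory in a remote NUMA domain pays extra latency. One common approach is to give each MPI rank a contiguous block of cores inside a single NUMA domain and keep its OpenMP threads there. The sketch below only computes such a layout and does not pin anything. It assumes that cores are numbered consecutively within each domain, which is not true on every machine; tools such as `lscpu` or hwloc's `lstopo` show the real numbering. `numa_layout` is a hypothetical name.

```python
from typing import Dict, List


def numa_layout(domains: int, cores_per_domain: int,
                ranks: int, threads_per_rank: int) -> Dict[int, List[int]]:
    """Map each rank to a block of core IDs that never straddles a NUMA domain.

    Assumes domain d owns cores d*cores_per_domain ... (d+1)*cores_per_domain - 1.
    """
    if threads_per_rank < 1 or cores_per_domain % threads_per_rank != 0:
        raise ValueError("threads_per_rank must evenly divide cores_per_domain")
    ranks_per_domain = cores_per_domain // threads_per_rank
    if ranks > domains * ranks_per_domain:
        raise ValueError("not enough cores for the requested ranks x threads")
    layout: Dict[int, List[int]] = {}
    for rank in range(ranks):
        domain, slot = divmod(rank, ranks_per_domain)
        first = domain * cores_per_domain + slot * threads_per_rank
        layout[rank] = list(range(first, first + threads_per_rank))
    return layout


# Two sockets with 4 cores each, 2 ranks x 4 threads: one rank per socket.
print(numa_layout(domains=2, cores_per_domain=4, ranks=2, threads_per_rank=4))
# {0: [0, 1, 2, 3], 1: [4, 5, 6, 7]}
```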

The target machines for SuperLU_DIST are highly parallel distributed-memory hybrid systems. It uses MPI, OpenMP and CUDA to support various forms of parallelism, and it supports both real and complex datatypes, both single and double precision, and 64-bit integer indexing. Routines include xGSTRF (factorization).
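xGSTRF computes an LU factorization. For intuition only, the sketch below is a textbook dense LU factorization with partial pivoting in plain Python. It returns a row permutation together with a unit lower-triangular L and an upper-triangular U such that the permuted A equals LU. It is not SuperLU_DIST's algorithm, which works on sparse matrices with distributed data structures. `lu_partial_pivot` is a hypothetical name.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def lu_partial_pivot(a: Matrix) -> Tuple[List[int], Matrix, Matrix]:
    """Dense LU with partial pivoting: returns (perm, L, U) with A[perm[i]] == (L @ U)[i]."""
    n = len(a)
    u = [row[:] for row in a]               # working copy that becomes U
    l = [[0.0] * n for _ in range(n)]
    perm = list(range(n))
    for k in range(n):
        # Pick the row with the largest pivot magnitude in column k.
        p = max(range(k, n), key=lambda i: abs(u[i][k]))
        if u[p][k] == 0.0:
            raise ValueError("matrix is singular")
        if p != k:
            # Swap rows of U, the already-computed part of L, and the permutation.
            u[k], u[p] = u[p], u[k]
            l[k], l[p] = l[p], l[k]
            perm[k], perm[p] = perm[p], perm[k]
        l[k][k] = 1.0
        for i in range(k + 1, n):
            factor = u[i][k] / u[k][k]
            l[i][k] = factor
            for j in range(k, n):
                u[i][j] -= factor * u[k][j]  # eliminate entry (i, k)
    return perm, l, u


a = [[2.0, 1.0, 1.0], [4.0, -6.0, 0.0], [-2.0, 7.0, 2.0]]
perm, l, u = lu_partial_pivot(a)
print(perm)  # [1, 0, 2]
```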

In computing, multitasking is the concurrent execution of multiple tasks (also known as processes) over a certain period of time. New tasks can interrupt already started ones before they finish, instead of waiting for them to end. As a result, a computer executes segments of multiple tasks in an interleaved manner, while the tasks share common processing resources such as central processing units (CPUs) and main memory.
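The interleaved execution described above can be illustrated with a toy round-robin scheduler built from Python generators. Each task yields after one unit of work, and the scheduler then switches to the next task. This is cooperative scheduling, used here as an analogy: operating systems usually preempt tasks (for example on timer interrupts) rather than waiting for them to yield. `task` and `round_robin` are hypothetical names.

```python
from collections import deque
from typing import Deque, Generator, List

Task = Generator[str, None, None]


def task(name: str, steps: int) -> Task:
    """A task that performs `steps` units of work, yielding after each one."""
    for i in range(steps):
        yield f"{name}{i}"


def round_robin(tasks: List[Task]) -> List[str]:
    """Run tasks one step at a time in turn until all have finished."""
    ready: Deque[Task] = deque(tasks)
    trace: List[str] = []
    while ready:
        current = ready.popleft()
        try:
            trace.append(next(current))
            ready.append(current)   # not finished: back of the queue
        except StopIteration:
            pass                    # task completed; drop it
    return trace


print(round_robin([task("A", 3), task("B", 1), task("C", 2)]))
# ['A0', 'B0', 'C0', 'A1', 'C1', 'A2']
```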